AI-Driven Knowledge Discovery: Advancing Biomedicine Through Automating Scientific Research

Scientific literature has grown at a rate of 8-9% annually since 1980, doubling roughly every eight to nine years and creating a volume of information that no individual researcher can process effectively. This exponential growth has turned knowledge management from a human-scale task into one that requires advanced computation.
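As a quick check on these growth figures: for a constant annual growth rate r, the doubling time T satisfies (1 + r)^T = 2, so T = ln 2 / ln(1 + r). A minimal Python sketch of that arithmetic:

```python
import math

def doubling_time(annual_rate: float) -> float:
    """Years needed for a quantity growing at a constant annual rate to double.

    Solves (1 + annual_rate) ** T == 2 for T.
    """
    if annual_rate <= 0:
        raise ValueError("growth rate must be positive")
    return math.log(2) / math.log(1 + annual_rate)

for rate in (0.08, 0.09):
    print(f"{rate:.0%} per year -> doubles every {doubling_time(rate):.1f} years")
```

At 8% per year the volume doubles about every 9.0 years, and at 9% about every 8.0 years.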

The Biden Administration’s 2023 Executive Order on AI specifically addresses AI’s role in scientific research, and frameworks ranging from the U.S. PCAST recommendations to the EU’s AI Act acknowledge the importance of reliable AI systems in advancing biomedical discovery. These initiatives recognize AI’s potential to reshape individual scientific disciplines as well as the way science itself is conducted.

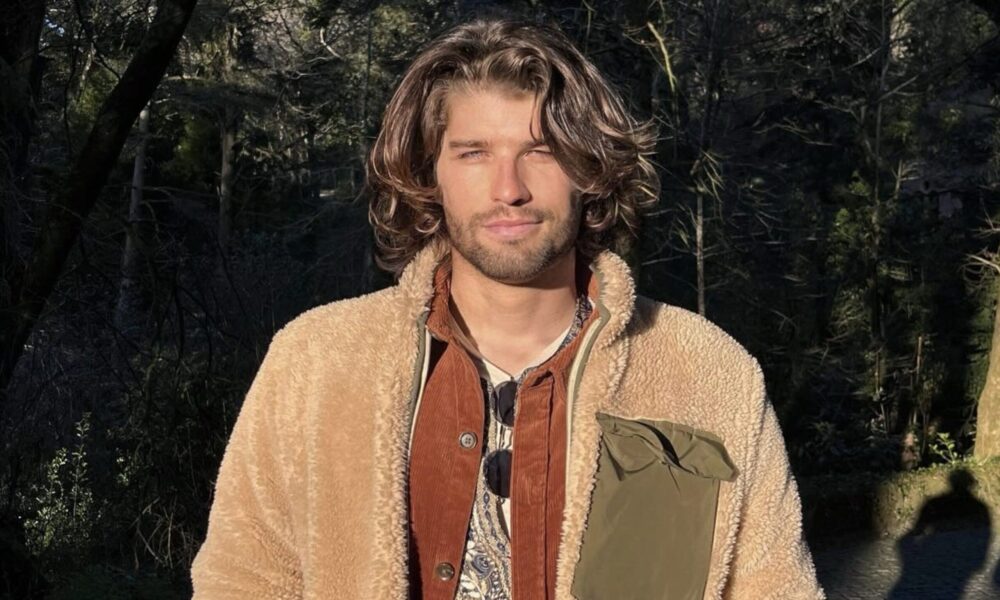

“Creating intelligent systems to understand the ‘original’ intelligent system – life – was likely going to be one of, if not the last, remaining frontier,” explains Markus Strasser, a specialist in AI-driven knowledge extraction and biomedical research automation whose work has spurred much discussion in the fields of AI and life science automation.

Strasser’s work spans the technology industry and academia and addresses the limitations of conventional information retrieval, focusing on the more fundamental challenge of knowledge representation. “Symbolic knowledge graphs and ontologies often oversimplify the underlying structure of knowledge. I feel it might by definition defy structure entirely. Real scientific insight doesn’t fit into neat hierarchical taxonomies – it’s not a lattice. It’s contextual, elusive and embodied,” he notes. This observation has informed his development of scientific NLP tools designed to capture the complex, interconnected nature of biomedical knowledge.

Funded by Emergent Ventures and working with organizations including Sage Publishing, Academia.edu, and Octopus.ac, Strasser has developed systems to extract, structure, and analyze biomedical literature at scale. These systems rely on natural language processing pipelines that identify scientific entities (genes, diseases, chemicals), map the relationships between them, and construct explicit, validated knowledge artifacts.
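The pipeline described above (recognize entities, then map relationships) can be illustrated with a deliberately simple sketch. It assumes a tiny hand-written dictionary (`LEXICON`, hypothetical) in place of a trained named-entity model, and treats two entities mentioned in the same sentence as a candidate relation. Sentence-level co-occurrence is a common baseline, not Strasser’s actual system; real pipelines add trained models and a validation step.

```python
import itertools
import re
from collections import Counter

# Hypothetical toy lexicon standing in for a trained NER model.
LEXICON = {
    "brca1": "gene",
    "tp53": "gene",
    "breast cancer": "disease",
    "olaparib": "chemical",
}

def tag_entities(sentence: str) -> list[tuple[str, str]]:
    """Return (entity, type) pairs found in a sentence by dictionary lookup."""
    text = sentence.lower()
    found = []
    for term, etype in LEXICON.items():
        if re.search(r"\b" + re.escape(term) + r"\b", text):
            found.append((term, etype))
    return found

def cooccurrence_relations(abstract: str) -> Counter:
    """Count candidate relations: entity pairs co-mentioned in one sentence."""
    relations = Counter()
    for sentence in re.split(r"(?<=[.!?])\s+", abstract):
        entities = sorted(tag_entities(sentence))
        for a, b in itertools.combinations(entities, 2):
            relations[(a, b)] += 1
    return relations

abstract = (
    "BRCA1 mutations increase the risk of breast cancer. "
    "Olaparib is approved for BRCA1-mutated breast cancer."
)
for (a, b), n in cooccurrence_relations(abstract).items():
    print(f"{a[0]} ({a[1]}) -- {b[0]} ({b[1]}): {n}")
```

Counting co-mentions across many abstracts gives a crude strength signal for each candidate relation. A validation stage would then decide which pairs become explicit knowledge artifacts.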

Through this work, Strasser also identified significant limitations of automated knowledge extraction, which he set out in his research report The Business of Extracting Knowledge from Academic Publications. “Close to nothing of what makes science actually work is published as text on the web,” he writes, going on to explain that the most crucial knowledge in science is tacit – residing in experts’ embodied cognition, lab protocols, and trial-and-error know-how – rather than written down in papers. An AI that learns only from published text therefore inevitably misses this context.

This has led him to explore approaches that integrate AI-driven analysis with experimental evidence rather than relying solely on the academic literature. Earlier systems such as IBM Watson for Drug Discovery struggled commercially despite substantial investment, while more successful recent efforts pair AI with scientific expertise. BenevolentAI, for example, identified the rheumatoid arthritis drug baricitinib as a candidate COVID-19 treatment by analyzing viral biology and drug data, a prediction subsequently confirmed in clinical trials.

AI-powered knowledge extraction in biomedicine

By structuring the unstructured – turning free-form text into typed graphs – knowledge systems aim to let scientists query knowledge in more expressive ways. Writing a literature review or scanning for prior work has traditionally been labor-intensive, but AI tools are accelerating this work. There are now AI systems that can screen thousands of abstracts for relevance or extract data points from papers in hours, a task that would take a team of humans weeks.
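To make “typed graphs” concrete: once extraction yields (subject, relation, object) triples with entity types, a question such as “which chemicals act on genes associated with disease X?” becomes a two-hop query. The sketch below uses plain Python dictionaries and made-up example triples. Production systems would typically use a graph database and a dedicated query language instead.

```python
from collections import defaultdict

# Hypothetical extracted triples: (subject, relation, object).
TRIPLES = [
    ("olaparib", "inhibits", "PARP1"),
    ("PARP1", "associated_with", "breast cancer"),
    ("BRCA1", "associated_with", "breast cancer"),
    ("tamoxifen", "binds", "ESR1"),
    ("ESR1", "associated_with", "breast cancer"),
    ("aspirin", "inhibits", "PTGS2"),
    ("PTGS2", "associated_with", "colorectal cancer"),
]
# Entity types assigned during extraction.
TYPES = {
    "olaparib": "chemical", "tamoxifen": "chemical", "aspirin": "chemical",
    "PARP1": "gene", "BRCA1": "gene", "ESR1": "gene", "PTGS2": "gene",
    "breast cancer": "disease", "colorectal cancer": "disease",
}

def chemicals_targeting_disease_genes(disease: str) -> dict[str, set[str]]:
    """Two-hop query: chemical -[any relation]-> gene -[associated_with]-> disease."""
    genes = {s for s, r, o in TRIPLES
             if r == "associated_with" and o == disease and TYPES.get(s) == "gene"}
    hits = defaultdict(set)
    for s, r, o in TRIPLES:
        if TYPES.get(s) == "chemical" and o in genes:
            hits[s].add(o)
    return dict(hits)

print(chemicals_targeting_disease_genes("breast cancer"))
```

The types are what make the query expressive: the same triples answer “which genes?” or “which chemicals?” just by filtering on a different type.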

According to a survey of recent techniques, AI models can support nearly every stage of a systematic review – from searching databases to extracting and synthesizing evidence.
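Relevance screening, the first stage of a systematic review, is often bootstrapped by ranking candidate abstracts against a description of the review question. The sketch below implements a bare-bones TF-IDF and cosine-similarity ranker in pure Python. The abstracts and query are invented, and modern screening tools generally rely on trained classifiers or language models rather than this baseline.

```python
import math
import re
from collections import Counter

def tokenize(text: str) -> list[str]:
    return re.findall(r"[a-z0-9]+", text.lower())

def tfidf_vectors(docs: list[list[str]]) -> list[dict[str, float]]:
    """Weight each term by term frequency times smoothed inverse document frequency."""
    n = len(docs)
    df = Counter(term for doc in docs for term in set(doc))
    vectors = []
    for doc in docs:
        tf = Counter(doc)
        vectors.append({t: c * (math.log((1 + n) / (1 + df[t])) + 1) for t, c in tf.items()})
    return vectors

def cosine(a: dict[str, float], b: dict[str, float]) -> float:
    dot = sum(w * b.get(t, 0.0) for t, w in a.items())
    norm = math.sqrt(sum(w * w for w in a.values())) * math.sqrt(sum(w * w for w in b.values()))
    return dot / norm if norm else 0.0

def rank_abstracts(query: str, abstracts: list[str]) -> list[tuple[float, int]]:
    """Return (score, index) pairs, most relevant abstract first."""
    docs = [tokenize(a) for a in abstracts] + [tokenize(query)]
    vecs = tfidf_vectors(docs)
    qvec = vecs[-1]
    scores = [(cosine(qvec, v), i) for i, v in enumerate(vecs[:-1])]
    return sorted(scores, reverse=True)

abstracts = [
    "Randomized trial of baricitinib in hospitalized COVID-19 patients.",
    "Soil microbiome diversity in alpine meadows.",
    "Observational study of JAK inhibitors for COVID-19 pneumonia.",
]
for score, i in rank_abstracts("JAK inhibitor treatment for COVID-19", abstracts):
    print(f"{score:.2f}  {abstracts[i]}")
```

Note that exact token matching misses the link between “inhibitor” and “inhibitors”. Stemming or embedding-based similarity is one reason learned models outperform this kind of baseline.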

Another example is DARPA’s ASKE program (Automating Scientific Knowledge Extraction), a U.S. government initiative that developed AI to help “interpret and expose scientific knowledge” from publications and even from model code, integrating that information into machine-curated models.
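ASKE’s scope extended beyond papers to model code. As a hedged illustration of the basic idea (not ASKE’s actual tooling), Python’s standard `ast` module can expose the parameters and update equations inside a small simulation script. The SIR epidemic model source below is a made-up example.

```python
import ast

MODEL_SOURCE = '''
beta = 0.3   # transmission rate
gamma = 0.1  # recovery rate

def step(s, i, r, dt):
    new_inf = beta * s * i * dt
    new_rec = gamma * i * dt
    return s - new_inf, i + new_inf - new_rec, r + new_rec
'''

def extract_model_facts(source: str) -> dict:
    """List top-level constant assignments and the assignments inside functions."""
    tree = ast.parse(source)
    params = {}
    equations = []
    for node in tree.body:
        if (isinstance(node, ast.Assign) and len(node.targets) == 1
                and isinstance(node.targets[0], ast.Name)
                and isinstance(node.value, ast.Constant)):
            params[node.targets[0].id] = node.value.value
        elif isinstance(node, ast.FunctionDef):
            for stmt in ast.walk(node):
                if isinstance(stmt, ast.Assign):
                    equations.append(ast.unparse(stmt))  # requires Python 3.9+
    return {"parameters": params, "equations": equations}

facts = extract_model_facts(MODEL_SOURCE)
print(facts["parameters"])
for eq in facts["equations"]:
    print(eq)
```

Once parameters and equations are exposed in this form, they can be linked to values reported in the literature, which is the kind of integration the program aimed for.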

Crucially, however, simply mining published papers for insights has, as Strasser puts it, “negligible value in industry”. The approach, while technically fascinating, often fails to translate into real-world impact. Even well-funded organizations such as the Allen Institute for AI, with tools like Semantic Scholar, and a number of startups have found it challenging to commercialize these technologies.

A knowledge app that draws only from papers is solving the wrong problem, whereas platforms that combine literature with diverse data (genomic data, clinical trial results, patents, etc.) can add real value. This lesson has guided many to refocus on hybrid approaches that ground AI-driven insights in experimental evidence and human expertise, rather than treating the academic literature as a standalone gold mine.
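One simple way to express this hybrid idea is to score a hypothesis by pooling independent lines of evidence, so that literature alone cannot push a claim to high confidence. The noisy-OR combination below is chosen for illustration and assumes the sources are independent. The per-source reliability weights in `SOURCE_WEIGHTS` are invented, not calibrated values.

```python
# Hypothetical reliability weights per evidence type (not calibrated).
SOURCE_WEIGHTS = {
    "literature": 0.3,
    "genomic": 0.6,
    "clinical_trial": 0.9,
}

def noisy_or(evidence: dict[str, float]) -> float:
    """Combine per-source support scores in [0, 1] with a noisy-OR.

    Each source independently "fires" with probability weight * support;
    the claim counts as supported unless every source fails to fire.
    """
    p_none = 1.0
    for source, support in evidence.items():
        p_none *= 1.0 - SOURCE_WEIGHTS[source] * support
    return 1.0 - p_none

literature_only = noisy_or({"literature": 0.9})
hybrid = noisy_or({"literature": 0.9, "genomic": 0.7, "clinical_trial": 0.5})
print(f"literature only: {literature_only:.2f}, hybrid: {hybrid:.2f}")
```

Here strong literature support alone yields 0.27, while adding moderate genomic and clinical evidence raises the combined score to about 0.77.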

The endgame is a kind of automated research assistant that not only reads papers but also keeps computational models up to date with the latest findings, reducing the manual grunt work in science. For example, instead of a researcher manually tweaking a disease model when new biomarkers are reported in the literature, an AI could extract those findings and update the model automatically.
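The biomarker example can be sketched as a model object that accepts extracted findings, applies only confident and non-conflicting ones automatically, and records where each change came from so a human can audit it. Everything here (`DiseaseModel`, the `Finding` fields, the 0.8 threshold, the placeholder source IDs) is hypothetical.

```python
from dataclasses import dataclass, field

@dataclass
class Finding:
    biomarker: str
    direction: str      # "up" or "down" in disease vs. control
    confidence: float   # extraction confidence in [0, 1]
    source: str         # placeholder identifier of the supporting paper

@dataclass
class DiseaseModel:
    name: str
    biomarkers: dict[str, str] = field(default_factory=dict)
    provenance: dict[str, list[str]] = field(default_factory=dict)
    review_queue: list[Finding] = field(default_factory=list)

    def ingest(self, finding: Finding, threshold: float = 0.8) -> None:
        """Apply confident findings automatically; queue the rest for human review."""
        if finding.confidence < threshold:
            self.review_queue.append(finding)
            return
        current = self.biomarkers.get(finding.biomarker)
        if current is not None and current != finding.direction:
            # Conflicting evidence is never resolved automatically.
            self.review_queue.append(finding)
            return
        self.biomarkers[finding.biomarker] = finding.direction
        self.provenance.setdefault(finding.biomarker, []).append(finding.source)

model = DiseaseModel("example disease")
model.ingest(Finding("marker_A", "up", 0.92, "paper-1"))
model.ingest(Finding("marker_B", "down", 0.55, "paper-2"))
model.ingest(Finding("marker_A", "down", 0.95, "paper-3"))
print(model.biomarkers, len(model.review_queue))
```

The review queue is the design choice that matters: automation handles the routine updates, while low-confidence or contradictory findings still reach a scientist.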

In daily lab work, AI-driven automation ranges from robot scientists that run experiments to smart lab notebooks that suggest next steps.

Mechanistic interpretability and AI transparency

Mechanistic interpretability is an emerging field in AI research that seeks to open up the “black box” of complex models (like deep neural networks) and explain how they work in terms of cause-and-effect mechanisms. Instead of treating a trained AI model as a mysterious oracle, mechanistic interpretability breaks it down much like a biologist dissects an organism – examining its internal structures, the function of each component (neurons or sub-modules), and how information flows to produce a given output.
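A basic dissection technique in this spirit is ablation: silence one internal unit at a time and measure how much the output changes. The toy two-layer network below uses hand-set, made-up weights so the effect is easy to follow. Interpretability research applies the same idea to trained models with far more units and more careful metrics.

```python
import math
from typing import Optional

# Hypothetical hand-set weights: 3 inputs -> 3 hidden units (ReLU) -> 1 output (sigmoid).
W_HIDDEN = [
    [1.0, 0.0, 0.0],
    [0.0, 2.0, -1.0],
    [0.1, 0.1, 0.1],
]
W_OUT = [0.5, 1.5, 0.05]

def forward(x: list[float], ablate: Optional[int] = None) -> float:
    """Run the network; if `ablate` is set, force that hidden unit's activation to zero."""
    hidden = []
    for j, weights in enumerate(W_HIDDEN):
        h = max(0.0, sum(w * xi for w, xi in zip(weights, x)))
        hidden.append(0.0 if j == ablate else h)
    z = sum(w * h for w, h in zip(W_OUT, hidden))
    return 1.0 / (1.0 + math.exp(-z))

def ablation_effects(x: list[float]) -> list[float]:
    """Drop in output when each hidden unit is silenced in turn."""
    baseline = forward(x)
    return [baseline - forward(x, ablate=j) for j in range(len(W_HIDDEN))]

effects = ablation_effects([1.0, 1.0, 0.0])
for j, delta in enumerate(effects):
    print(f"hidden unit {j}: output drops by {delta:.3f}")
```

For this input, silencing hidden unit 1 causes by far the largest drop, identifying it as the component that carries most of the computation.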

Black-box predictions could lead scientists astray or even pose safety risks. As one report on AI in science notes, the opacity of AI algorithms “poses significant challenges to scientific integrity, interpretability and public trust”. If an AI proposes a new drug candidate gleaned from literature, a scientist will want to know why – which papers or data support that, and how the model combined them. Strasser’s background in philosophy and his work on machine learning interpretability connect here: “If the neural net says ‘gene x cures y’ and we agree with its reasoning we can decide to test it. Whoever pays attention to the right signals, no matter if they come from an AI or other team members, will move in the right direction. AI transparency and interpretability becomes a topic when centralized authorities relegate their responsibilities to AI systems without oversight.”
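The “why” question can be answered mechanically if every edge in a knowledge graph keeps the sources it was extracted from. The sketch below finds the shortest chain linking a candidate drug to a disease with breadth-first search and returns the supporting sources for each hop. The graph, relations, and source labels are placeholders, not real data.

```python
from collections import deque

# Hypothetical evidence graph: node -> list of (neighbor, relation, supporting sources).
GRAPH = {
    "drug_X": [("kinase_K", "inhibits", ["paper-A", "assay-7"])],
    "kinase_K": [("pathway_P", "activates", ["paper-B"])],
    "pathway_P": [("disease_Y", "drives", ["paper-C", "paper-D"])],
    "disease_Y": [],
}

def explain(start: str, goal: str) -> list[tuple[str, str, str, list[str]]]:
    """Return the shortest evidence chain from start to goal, or [] if none exists."""
    parents = {start: None}
    queue = deque([start])
    while queue:
        node = queue.popleft()
        if node == goal:
            break
        for neighbor, relation, sources in GRAPH.get(node, []):
            if neighbor not in parents:
                parents[neighbor] = (node, relation, sources)
                queue.append(neighbor)
    if goal not in parents:
        return []
    chain = []
    node = goal
    while parents[node] is not None:
        prev, relation, sources = parents[node]
        chain.append((prev, relation, node, sources))
        node = prev
    return list(reversed(chain))

for subj, rel, obj, sources in explain("drug_X", "disease_Y"):
    print(f"{subj} --{rel}--> {obj}   evidence: {', '.join(sources)}")
```

Each printed hop gives the scientist something concrete to check, which is exactly what a bare prediction does not.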

Recent research suggests using “discovery strategies familiar from the life sciences to uncover the functional organization of complex AI systems”. In practical terms, that could mean tracing which neurons in a neural network correspond to certain medical concepts or figuring out how a language model “decides” that a gene causes a disease. By peering inside the model, we can verify its logic and catch errors. Strasser views this as essential not only for safety but also for the policy and governance of AI. If AIs are to inform scientific decisions or public health policy, they must be interpretable and auditable. This stance aligns with emerging AI governance principles.
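A common first step in tracing concept-neurons is to record each neuron’s activation over inputs that do or do not involve a concept, then rank neurons by how strongly their activation tracks that label. The sketch below uses invented activation values and a plain Pearson correlation. A high correlation only flags a candidate; showing that the neuron actually drives the model’s behavior requires interventions such as ablation.

```python
import math

def pearson(xs: list[float], ys: list[float]) -> float:
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sx = math.sqrt(sum((x - mx) ** 2 for x in xs))
    sy = math.sqrt(sum((y - my) ** 2 for y in ys))
    return cov / (sx * sy) if sx and sy else 0.0

# 1 = the input text mentions the concept (e.g. a specific disease), 0 = it does not.
concept_labels = [1, 0, 1, 1, 0, 0]
# Hypothetical activations of three neurons on the same six inputs.
activations = {
    "neuron_0": [0.9, 0.1, 0.8, 0.7, 0.2, 0.0],
    "neuron_1": [0.3, 0.4, 0.2, 0.5, 0.3, 0.4],
    "neuron_2": [0.3, 0.9, 0.2, 0.1, 0.8, 0.6],
}

# Rank by absolute correlation: strongly negative tracking is informative too.
ranking = sorted(activations,
                 key=lambda n: abs(pearson(activations[n], concept_labels)),
                 reverse=True)
for name in ranking:
    print(name, round(pearson(activations[name], concept_labels), 2))
```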

For instance, the EU’s AI Act places strong emphasis on transparency and explainability in AI systems (though it exempts AI developed solely for scientific research and development, to avoid stifling innovation). Likewise, the Biden Administration’s guidance on AI in healthcare and research calls for algorithms to be transparent and their results reproducible. Mechanistic interpretability research is thus a key piece in bridging AI and policy: it provides tools to show that an AI-driven insight is grounded in valid mechanisms rather than in coincidental correlations, biases, or the well-documented tendency of large language models to hallucinate.

Implications for policy and research funding

The confluence of AI and science is increasingly influencing policy decisions and the allocation of research funding. When asked about AI’s role in policy, Strasser notes: “AI can make it easy to analyze large amounts of data to inform policy makers but it will also be used to fabricate false realities. I’d say the ratio is in favor of the former since, over time, reality has a strong signal. Still, we as scientists, artists and engineers have to come up with strong tooling, informational hygiene and habits to mitigate the downsides of free ranging omni-capable generative models. Promethean fire comes with a price.”

For example, funding bodies such as the NIH or NSF could use AI analytics to detect which biomedical subfields are booming (perhaps an under-the-radar area such as CRISPR applications in neurology) or which important research questions are receiving scant attention. Policy is already beginning to move in this direction.
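Detecting a booming subfield can start with something as simple as fitting an exponential trend to yearly publication counts per topic and comparing growth rates. The counts below are invented for illustration. A real analysis would also need topic classification, normalization for overall publication growth, and uncertainty estimates.

```python
import math

def annual_growth_rate(counts: list[int]) -> float:
    """Fit ln(count) = a + b * year by least squares and return exp(b) - 1."""
    years = range(len(counts))
    logs = [math.log(c) for c in counts]
    n = len(counts)
    mean_t = sum(years) / n
    mean_y = sum(logs) / n
    num = sum((t - mean_t) * (y - mean_y) for t, y in zip(years, logs))
    den = sum((t - mean_t) ** 2 for t in years)
    return math.exp(num / den) - 1

# Hypothetical yearly publication counts per subfield (five consecutive years).
counts_by_topic = {
    "topic_A": [100, 110, 121, 133, 146],
    "topic_B": [20, 30, 45, 68, 101],
    "topic_C": [500, 495, 505, 498, 502],
}

growth = {topic: annual_growth_rate(c) for topic, c in counts_by_topic.items()}
for topic, rate in sorted(growth.items(), key=lambda kv: kv[1], reverse=True):
    print(f"{topic}: {rate:+.1%} per year")
```

Fitting on the log scale means a small but fast-growing field (topic_B) stands out, even though a large, flat field (topic_C) publishes far more papers in absolute terms.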

The White House’s Executive Order on AI charges the President’s Council of Advisors on Science and Technology (PCAST) with reporting on how AI can “transform research aimed at tackling major challenges”, a mandate that arguably extends to using AI to inform how national R&D investments are directed. We might soon see AI systems that scan global research output and advise governments on which moonshot projects could be feasible or which collaborations to foster. In policy advocacy circles, the idea of an “AI index of science” has been floated: a dynamic indicator of scientific progress in different domains, maintained by AI.

Strasser’s research in automatically synthesizing research knowledge feeds into this vision. By structuring academic findings, his work could help decision-makers quickly grasp the state of evidence on a topic (for instance, summarizing all known mechanisms of a disease when planning a big research initiative). It also promotes evidence-based policy: when lobbying for funding in a certain area, having AI-curated data on hand – say, showing a network of all studies linking environmental factors to autism – can powerfully justify the need for investment.

Another policy aspect is how AI might make the distribution of funding more equitable and strategic. Agencies are likely to mandate that any AI used in evaluating research or allocating funds be auditable and bias-checked, to avoid perpetuating biases (e.g., favoring topics that AI has more data on, which might disadvantage novel or interdisciplinary ideas). We see this in the EU’s approach, which insists on “safety, transparency, traceability, and non-discrimination” for AI, even as it encourages innovation.
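A basic audit of this kind might compare how often an AI screening tool recommends proposals from different groups, for example interdisciplinary versus single-discipline proposals. The sketch computes per-group selection rates and their ratio. The 0.8 flag threshold borrows the “four-fifths” rule of thumb from U.S. employment-discrimination practice and, like the data, is an illustrative assumption rather than any funding agency’s standard.

```python
from collections import defaultdict

def selection_rates(decisions: list[tuple[str, bool]]) -> dict[str, float]:
    """Fraction of proposals in each group that the tool recommended for funding."""
    totals = defaultdict(int)
    selected = defaultdict(int)
    for group, recommended in decisions:
        totals[group] += 1
        selected[group] += recommended  # True counts as 1
    return {g: selected[g] / totals[g] for g in totals}

def disparate_impact_ratio(rates: dict[str, float]) -> float:
    """Lowest group selection rate divided by the highest."""
    return min(rates.values()) / max(rates.values())

# Hypothetical screening outcomes: (proposal group, recommended?).
decisions = (
    [("single_discipline", True)] * 30 + [("single_discipline", False)] * 70
    + [("interdisciplinary", True)] * 9 + [("interdisciplinary", False)] * 41
)
rates = selection_rates(decisions)
ratio = disparate_impact_ratio(rates)
print(rates, f"ratio={ratio:.2f}", "FLAG for review" if ratio < 0.8 else "ok")
```

A flag here does not prove bias; it marks a disparity that human reviewers should explain before the tool’s recommendations are trusted.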

The broader landscape of AI-driven discovery in the life sciences

Startups such as Insilico Medicine and Recursion are using AI to find new drug candidates by analyzing both text and experimental data (high-throughput screens, images, etc.). These firms blend traditional knowledge graphs with modern machine learning, and some have advanced new molecules into clinical trials at record speed.

Meanwhile, the rise of large language models (LLMs) such as GPT-4 has introduced a new paradigm for knowledge discovery. LLMs are trained on vast swathes of text (including scientific papers) and can answer questions or generate hypotheses in plain language, mimicking a human researcher’s breadth of knowledge. This has enabled tools such as Elicit and Galactica (an LLM prototype for science), which allow scientists to query the literature via conversational prompts.

The advantage of LLMs is their flexibility – they can synthesize information across disciplines and explain concepts in natural language. However, they come with notorious drawbacks: without careful constraints, LLMs may hallucinate false information or cite non-existent references. This is why there’s a trend toward hybrid systems that combine LLMs with reliable databases.
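A minimal form of this grounding is to check every reference an LLM cites against a trusted index before the answer reaches a user, and to flag anything that cannot be found. In the sketch below the index is a hard-coded set of placeholder DOIs and the model output is a fixed string. In practice the lookup would query a bibliographic database, and this check catches only fabricated citations, not real papers cited for claims they do not make.

```python
import re

# Placeholder stand-in for a trusted bibliographic index.
KNOWN_DOIS = {
    "10.1000/example.001",
    "10.1000/example.002",
}

# Rough DOI matcher: "10." prefix, registrant code, "/", then a suffix up to a delimiter.
DOI_PATTERN = re.compile(r"10\.\d{4,9}/[^\s\]\),;]+")

def audit_citations(answer: str) -> dict[str, list[str]]:
    """Split the DOIs cited in a generated answer into verified and unverified."""
    cited = [doi.rstrip(".") for doi in DOI_PATTERN.findall(answer)]
    return {
        "verified": [d for d in cited if d in KNOWN_DOIS],
        "unverified": [d for d in cited if d not in KNOWN_DOIS],
    }

llm_answer = (
    "Gene X is linked to disease Y [10.1000/example.001] and was "
    "confirmed in a follow-up study [10.1000/example.999]."
)
report = audit_citations(llm_answer)
print(report)
```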

The industry landscape now features collaborations between big tech, pharma, and academia. Tech giants such as Google and Microsoft are investing in AI-for-science initiatives (Google’s DeepMind demonstrated AI’s power with AlphaFold, solving the 50-year-old grand challenge of predicting protein structures).

Notably, a 2024 World Economic Forum report listed “AI for scientific discovery” as one of the top emerging technologies, noting that “AI has the potential to transform every scientific discipline and many aspects of the way we conduct science”. It cited examples from discovering new antibiotics to designing novel materials – achievements that were either impossible or prohibitively slow without AI.

In biomedical science specifically, AI is helping researchers connect dots that were previously too far apart: linking genomic patterns to the literature on metabolic diseases, or using image recognition to find disease markers that correlate with information in clinical notes.

Going forward, some of the most promising developments include AI systems that can interpret experimental data in tandem with text. For example, an AI might read a cancer biology paper while analyzing genomic datasets to propose a unified hypothesis.

Another exciting frontier is the concept of a “self-driving lab,” where AI algorithms iteratively design and run experiments (via robotics) and update hypotheses on the fly – essentially closing the loop from knowledge discovery to experimental validation. This is already being piloted in drug discovery and materials science. In the context of biomedical research automation, these advances hint at a future where scientific progress is significantly accelerated.
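The design-run-update loop can be illustrated with a toy: a simulated “experiment” returns yield as a function of one condition (say, temperature), and the loop chooses the next condition to test based only on earlier results. Golden-section search is used here as a deliberately simple, deterministic stand-in for the Bayesian optimization and active-learning methods more typical of self-driving labs. The yield function and its optimum are made up.

```python
import math

def run_experiment(temperature: float) -> float:
    """Simulated assay with a hidden optimum at 37.5 (unknown to the optimizer)."""
    return 100.0 - (temperature - 37.5) ** 2

def closed_loop(lo: float, hi: float, budget: int = 30) -> tuple[float, list[float]]:
    """Golden-section search: each round runs one new experiment and narrows the range."""
    inv_phi = (math.sqrt(5) - 1) / 2  # ~0.618
    tested = []
    a = hi - inv_phi * (hi - lo)
    b = lo + inv_phi * (hi - lo)
    ya, yb = run_experiment(a), run_experiment(b)
    tested += [a, b]
    for _ in range(budget - 2):
        if ya > yb:
            # Optimum lies in [lo, b]; the old a becomes the new right interior point.
            hi, b, yb = b, a, ya
            a = hi - inv_phi * (hi - lo)
            ya = run_experiment(a)
            tested.append(a)
        else:
            # Optimum lies in [a, hi]; the old b becomes the new left interior point.
            lo, a, ya = a, b, yb
            b = lo + inv_phi * (hi - lo)
            yb = run_experiment(b)
            tested.append(b)
    return (lo + hi) / 2, tested

best, history = closed_loop(20.0, 60.0)
print(f"best condition ~ {best:.2f} after {len(history)} experiments")
```

Each iteration reuses one previous measurement and runs exactly one new experiment, the same economy that matters when every “function evaluation” is a physical assay.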

The role of the human scientist will increasingly be to guide the AI, asking the right questions and vetting the AI’s suggestions, rather than manually crunching data or digging through archives.

In conclusion, AI-driven biomedical knowledge discovery is transitioning from hopeful experiments to practical tools that are reshaping research. Markus Strasser’s contributions – from building literature extraction systems to dissecting large models’ internal reasoning circuits – reveal both the potential and the limitations of this emerging direction.
